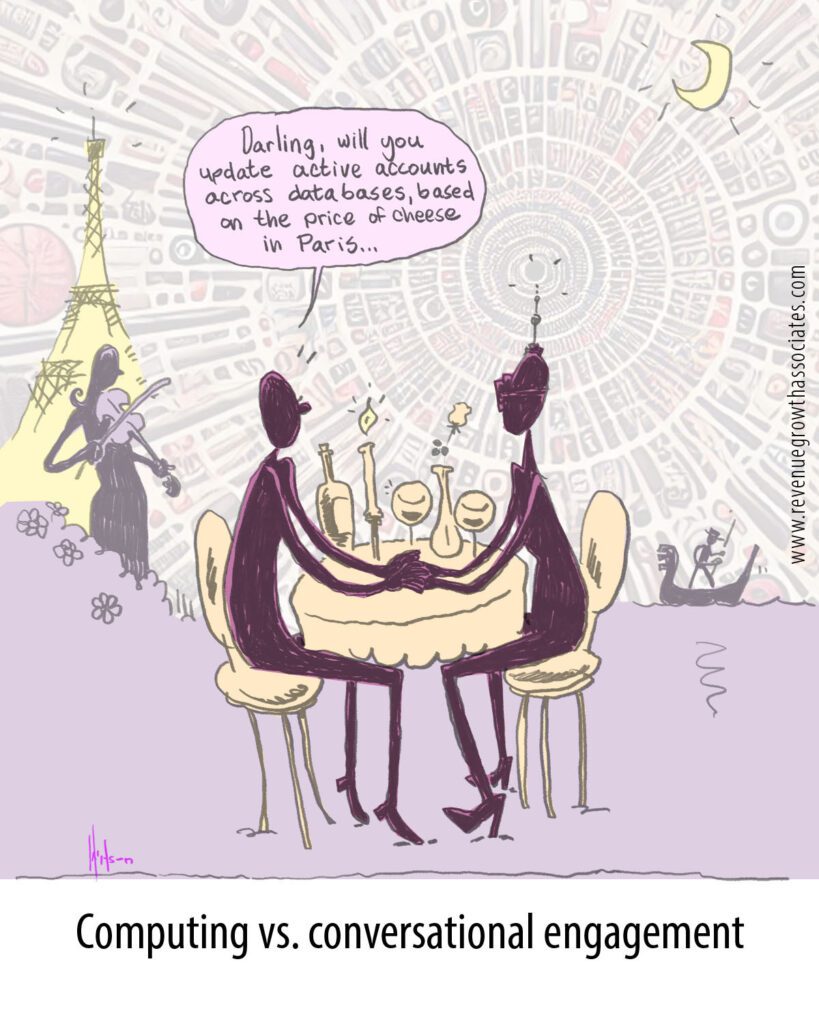

Ever been in a perfect setting with someone special, only to find yourself stumbling over what to say? Awkward, right? Interacting with generative AI can feel the same without the right approach. This is not another deep-dive post on how to prompt a Gen AI large language model (LLM). There are already plenty of guides on effective prompting structures and techniques being promoted, advocated, and sold. Instead, let’s take a step back and explore the broader implications of a new kind of human-computer interface, one defined by conversational engagement.

Legacy Systems vs. Gen AI

Legacy systems and current computing paradigms have long supported user interaction through predefined paths and system-defined input structures. Users follow structured commands, navigate menus, and fill out forms. The interaction is linear, predictable, and often limited to the capabilities pre-programmed into the system.

The Paradigm Shift with Gen AI

Gen AI changes this. Conversation becomes the primary way of interacting with the computer, and that shift brings some significant changes:

- Quality of the Question = Quality of the Output: The quality of the output from an AI largely depends on the quality of the input question. This means that users must invest thought and competence into framing their interactions effectively. AI is a powerful tool, but it shines best when wielded by a capable person who is engaged and thoughtful in their interactions.

- The Art of Asking Good Questions: Asking good questions is both an art and a skill. It requires understanding the context and the desired outcome. A well-posed question can lead to insightful and valuable responses, while poorly framed questions might yield less useful results. This highlights the importance of users developing their skills in engaging with AI conversationally.

- Dynamic and Variable Responses: Just like human conversations, AI responses can vary each time a question is asked. This contrasts with legacy compute interactions, where the output is more predictable and strictly programmed. This variability can be advantageous, providing nuanced insights, but it also requires users to adapt to a more fluid and less predictable mode of interaction.

- The Undefined ‘Next Step’: In traditional computing, the next steps are often clearly defined within workflows and applications. However, when interacting with AI, the subsequent actions based on AI responses are frequently not predefined. This introduces both opportunities and challenges. While it opens up a vast space for creative and innovative uses of AI, it also demands robust workflow management to harness AI’s full potential effectively. At present, this area remains somewhat uncharted, embodying the ‘wild west’ nature of current AI engagement.

Experimentation and Best Practices in Prompting

Within an enterprise, users across multiple roles should be experimenting with prompts that align with their roles and the tasks they perform. Identifying the most effective prompts takes some trial and error, and what works best today may need to be updated as the underlying model changes. This iterative process is crucial for maximizing the value derived from AI interactions.

- Best Practice: Formal Structure for Prompt Collection: Setting up a formal structure for collecting prompts created by users is a best practice. Capturing these prompts in a way that supports reuse and refinement ensures that valuable insights are not lost and can be shared across the organization. Consider creating a centralized repository where users can submit and access effective prompts.

- Cataloging Prompts by Function, Role, and Task: To further enhance the usability and effectiveness of prompts, catalog them by function, role, and task. This allows for better mapping into both current and future workflows. By categorizing prompts this way, enterprises can create a comprehensive database that users can easily navigate to find relevant prompts for their specific needs.

- Creating Playbooks for Key Outcomes: Consider developing playbooks for key outcomes desired by various roles or functions within the organization. These playbooks can showcase how to use effective prompts to access the information, insights, or guidance necessary for executing specific functions, roles, or tasks. A well-crafted playbook serves as a valuable resource, providing step-by-step instructions and best practices for leveraging AI.

- Prompts Beyond Direct Interaction: It’s important to note that prompts are used by more than just individuals interacting directly with an LLM. IT departments and developers are also creating prompts to embed in AI-driven applications they are building. These embedded prompts enhance the functionality and user experience of AI applications, making them more intuitive and responsive to user needs.

- Gamification to Encourage Innovation: Gamification can be an effective way to encourage people across functions to come up with impactful prompts. By defining criteria for ‘great prompts’ (such as clarity, effectiveness, and alignment with strategic goals) and rewarding users who develop the best prompts, enterprises can foster a culture of continuous improvement and innovation.
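
For teams that want to see what a prompt catalog might look like in practice, here is a minimal Python sketch. It is an illustration only, not a recommended product or schema: the names `PromptEntry` and `PromptCatalog`, the fields, and the sample prompts are all hypothetical. It shows prompts tagged by function, role, and task, and a template with placeholders of the kind a developer might embed in an application.

```python
from dataclasses import dataclass, field
from string import Template


@dataclass(frozen=True)
class PromptEntry:
    """One reusable prompt, tagged by function, role, and task (hypothetical schema)."""
    name: str
    function: str   # e.g. "Finance"
    role: str       # e.g. "Analyst"
    task: str       # e.g. "Variance summary"
    template: str   # prompt text with $placeholders
    version: int = 1

    def render(self, **values: str) -> str:
        # substitute() raises KeyError when a placeholder has no value,
        # so an incomplete prompt fails loudly instead of reaching the model.
        return Template(self.template).substitute(**values)


@dataclass
class PromptCatalog:
    """In-memory stand-in for a centralized prompt repository."""
    entries: list[PromptEntry] = field(default_factory=list)

    def submit(self, entry: PromptEntry) -> None:
        self.entries.append(entry)

    def find(self, *, function=None, role=None, task=None) -> list[PromptEntry]:
        # A filter left as None matches everything for that tag.
        def matches(e: PromptEntry) -> bool:
            return ((function is None or e.function == function)
                    and (role is None or e.role == role)
                    and (task is None or e.task == task))
        return [e for e in self.entries if matches(e)]


# Sample data, invented for illustration.
catalog = PromptCatalog()
catalog.submit(PromptEntry(
    name="variance-summary",
    function="Finance", role="Analyst", task="Variance summary",
    template="Summarize the main drivers of the $period budget variance for $audience.",
))
catalog.submit(PromptEntry(
    name="job-post-draft",
    function="HR", role="Recruiter", task="Job posting",
    template="Draft a job posting for a $title role in $location.",
))

finance_prompts = catalog.find(function="Finance", role="Analyst")
rendered = finance_prompts[0].render(period="Q3", audience="the CFO")
print(rendered)
```

A real repository would likely live in a shared database or document store and track authorship and revisions; the `version` field here only hints at that.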

Embracing the New Human-Computer Interface

Embracing this new conversational interface with AI requires a mindset shift. It is not just about mastering the technical aspects of AI but also about enhancing one’s conversational skills to engage effectively with these systems. Here are a few strategies to navigate this transition:

- Develop Conversational Competence: Invest time in learning how to frame questions effectively. Understand the context and desired outcomes before interacting with AI.

- Iterative Engagement: Treat interactions with AI as an iterative process. Refine your questions based on the responses you receive to get closer to the desired outcome.

- Workflow Integration: Work towards integrating AI responses into your workflow. This might involve developing new workflow management strategies that can handle the dynamic and often undefined nature of AI interactions.

- Continuous Learning: Stay updated with the latest developments in AI and conversational interfaces. This will help you adapt to new features and capabilities as they emerge.

- Role-Specific Prompting: Encourage users across different roles to experiment with prompts tailored to their specific tasks and responsibilities. This targeted approach can lead to more effective and relevant AI interactions.

- Centralized Prompt Repository: Establish a formal structure for collecting and sharing effective prompts. This repository should be easily accessible and regularly updated to reflect the latest best practices.

- Incentivize Innovation: Use gamification to motivate employees to develop and share impactful prompts. Recognize and reward those who contribute the most effective solutions.
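
As one way to make the "Incentivize Innovation" idea concrete, the Python sketch below ranks contributors by their best-scoring prompt. The criteria (clarity, effectiveness, alignment) come from the discussion of ‘great prompts’; the weights, the 1-to-5 rating scale, and the contributor names are assumptions made up for this example, and each organization would choose its own.

```python
from collections import defaultdict

# Hypothetical rubric: weights are illustrative and sum to 10.
WEIGHTS = {"clarity": 3, "effectiveness": 5, "alignment": 2}


def score(ratings: dict[str, int]) -> float:
    """Weighted average of reviewer ratings (assumed 1-5), one per criterion."""
    total = sum(WEIGHTS[criterion] * ratings[criterion] for criterion in WEIGHTS)
    return total / sum(WEIGHTS.values())


def leaderboard(submissions):
    """Return (author, best score) pairs, highest score first."""
    best = defaultdict(float)
    for author, ratings in submissions:
        best[author] = max(best[author], score(ratings))
    return sorted(best.items(), key=lambda item: item[1], reverse=True)


# Invented sample submissions: (author, reviewer ratings).
submissions = [
    ("alice", {"clarity": 5, "effectiveness": 4, "alignment": 3}),
    ("bob", {"clarity": 3, "effectiveness": 5, "alignment": 5}),
    ("alice", {"clarity": 2, "effectiveness": 2, "alignment": 2}),
]
ranking = leaderboard(submissions)
for author, best_score in ranking:
    print(f"{author}: {best_score:.1f}")
```

Ranking by each person's best prompt rather than by total count is a design choice: it rewards quality over volume, which fits the goal of surfacing the most effective prompts.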

The shift from predefined, system-driven interactions to dynamic, conversational engagement with Gen AI represents a major change in how we interact with computers. By developing the skills to ask the right questions, embracing the variability in AI responses, and integrating AI effectively into workflows, enterprises can now think differently about ‘how work gets done’. The ability to talk to AI effectively will become an essential skill, opening up new paths to innovation and productivity and, of course, new opportunities for everyone in the workforce wondering what AI will mean for them.

Ready to help your organization talk to AI effectively? We’d love to talk to you. Human to human in this case!